Statistical Power
In statistics, the power of a binary hypothesis test is the probability that the test correctly rejects the null hypothesis ($H_0$) when a specific alternative hypothesis ($H_1$) is true. It is commonly denoted by $1 - \beta$, and represents the chances of a true positive detection conditional on the actual existence of an effect to detect. Statistical power ranges from 0 to 1, and as the power of a test increases, the probability of making a type II error by wrongly failing to reject the null hypothesis decreases.

Notation

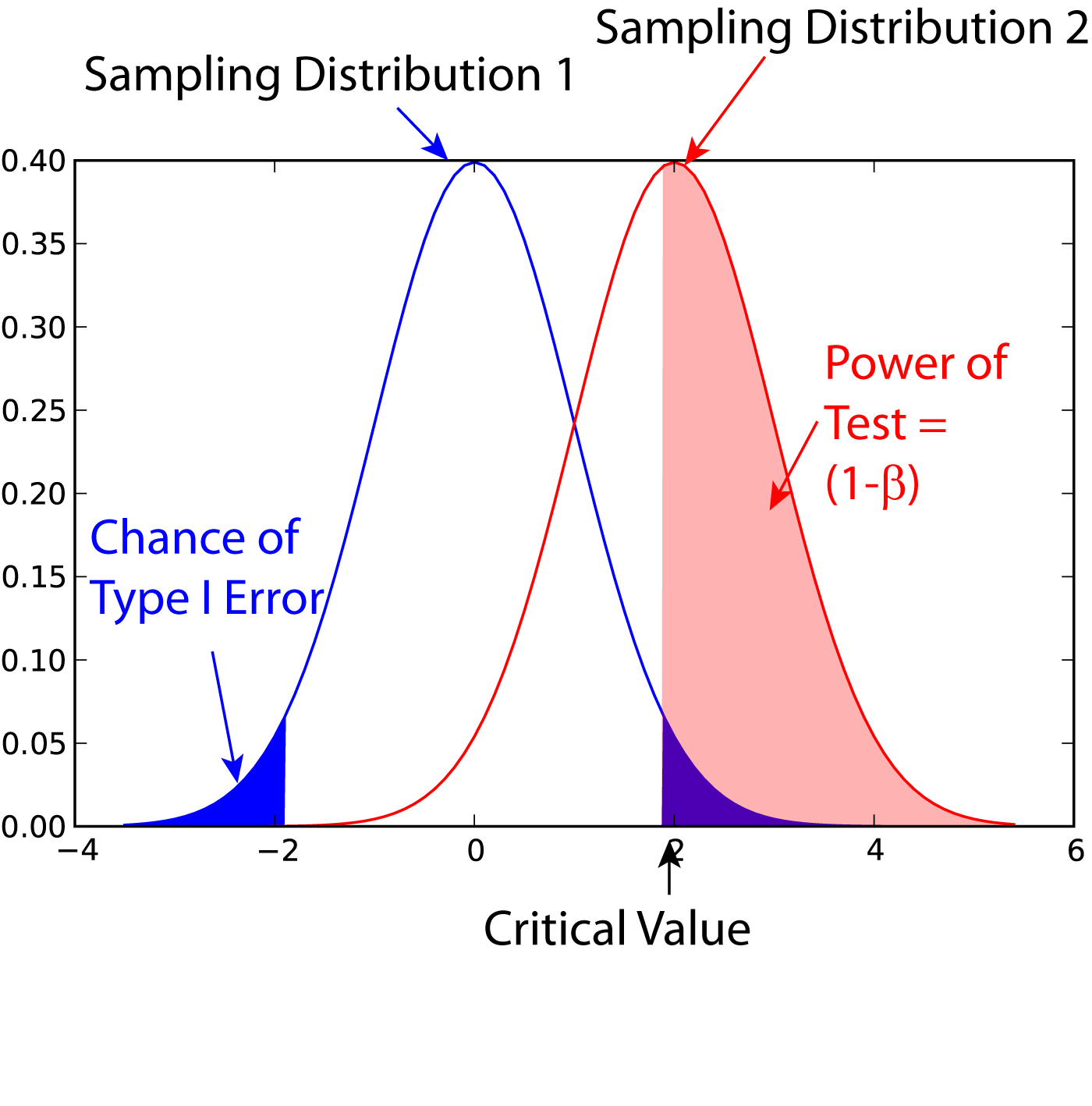

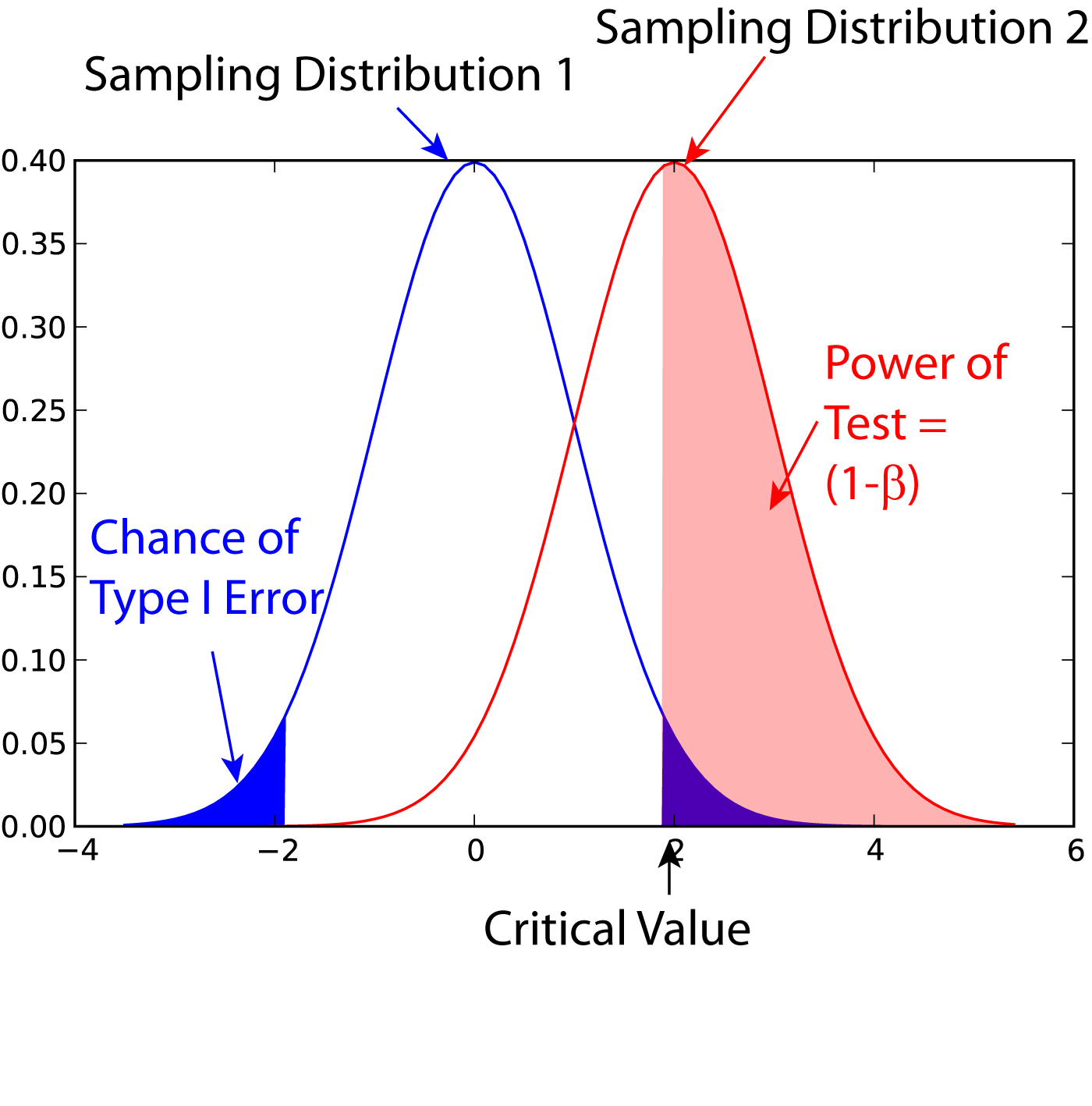

This article uses the following notation:

* ''β'' = probability of a Type II error, known as a "false negative"
* 1 − ''β'' = probability of a "true positive", i.e., correctly rejecting the null hypothesis. "1 − ''β''" is also known as the power of the test.
* ''α'' = probability of a Type I error, known as a "false positive"
* 1 − ''α'' = probability of a "true negative", i.e., correctly not rejecting the null hypothesis

Description

For a type II error probability of $\beta$, the corresponding statistical power is $1 - \beta$. For example, if experiment E has a statistical power of 0.7 and experiment F has a statistical power of 0.95, then experiment E is more likely than experiment F to commit a type II error, which makes E less sensitive for detecting real effects. Lower power does not by itself make experiment E more reliable: the type I error probability is fixed separately by the chosen significance level, although for a given design and sample size, lowering the significance level also lowers the power. Power can equivalently be thought of as the probability of accepting the alternative hypothesis ($H_1$) when it is true, that is, the ability of a test to detect a specific effect if that effect actually exists. Thus,

$$\text{power} = \Pr(\text{reject } H_0 \mid H_1 \text{ is true}).$$

If $H_1$ is not an equality but rather simply the negation of $H_0$ (so for example with $H_0: \mu = 0$ for some unobserved population parameter $\mu$ we have simply $H_1: \mu \neq 0$) then power cannot be calculated unless probabilities are known for all possible values of the parameter that violate the null hypothesis. Thus one generally refers to a test's power ''against a specific alternative hypothesis''.

As the power increases, there is a decreasing probability of a type II error, also called the ''false negative rate'' ($\beta$), since the power is equal to $1 - \beta$. A similar concept is the type I error probability, also referred to as the ''false positive rate'' or the level of a test under the null hypothesis.

In the context of binary classification, the power of a test is called its ''statistical sensitivity'', its ''true positive rate'', or its ''probability of detection''.

Power analysis

A related concept is "power analysis". Power analysis can be used to calculate the minimum sample size required so that one can be reasonably likely to detect an effect of a given size. For example: "How many times do I need to toss a coin to conclude it is rigged by a certain amount?" Power analysis can also be used to calculate the minimum effect size that is likely to be detected in a study using a given sample size. In addition, the concept of power is used to make comparisons between different statistical testing procedures: for example, between a parametric test and a nonparametric test of the same hypothesis.

Rule of thumb

Lehr's (rough) rule of thumb says that the sample size $n$ (for each group) for a two-sided two-sample t-test with power 80% ($\beta = 0.2$) and significance level $\alpha = 0.05$ should be:

$$n \approx 16\,\frac{s^2}{d^2},$$

where $s^2$ is an estimate of the population variance and $d = \mu_1 - \mu_2$ is the to-be-detected difference in the mean values of both samples.

For a one-sample t-test, 16 is to be replaced with 8.

The advantage of the rule of thumb is that it can be memorized easily and that it can be rearranged for $d$. For a rigorous analysis, a full power analysis should always be performed.
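The rule of thumb can be sketched in a few lines of Python. This is only an illustration: the function names `lehr_sample_size` and `lehr_detectable_difference` and the example inputs are made up here, and the result inherits the roughness of the rule itself (80% power, two-sided significance level 0.05).

```python
import math


def lehr_sample_size(variance, difference, one_sample=False):
    """Approximate n (per group) from Lehr's rule: n ~ 16 * s^2 / d^2.

    For a one-sample t-test the factor 16 is replaced with 8.
    """
    if difference == 0:
        raise ValueError("difference must be non-zero")
    factor = 8 if one_sample else 16
    return math.ceil(factor * variance / difference ** 2)


def lehr_detectable_difference(variance, n, one_sample=False):
    """The same rule rearranged for the detectable difference d."""
    factor = 8 if one_sample else 16
    return math.sqrt(factor * variance / n)


# Hypothetical inputs: variance estimate 4.0, difference of 1.0 to detect.
print(lehr_sample_size(variance=4.0, difference=1.0))
print(lehr_detectable_difference(variance=4.0, n=64))
```

Rounding up with `math.ceil` is a design choice of this sketch, so that the suggested sample size never falls below the value given by the rule.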

Background

Statistical tests use data from samples to assess, or make inferences about, a statistical population. In the concrete setting of a two-sample comparison, the goal is to assess whether the mean values of some attribute obtained for individuals in two sub-populations differ. For example, to test the null hypothesis that the mean scores of men and women on a test do not differ, samples of men and women are drawn, the test is administered to them, and the mean score of one group is compared to that of the other group using a statistical test such as the two-sample ''z''-test. The power of the test is the probability that the test will find a statistically significant difference between men and women, as a function of the size of the true difference between those two populations.
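To make "power as a function of the true difference" concrete, the following sketch evaluates the power of a two-sided two-sample ''z''-test. It assumes a known common standard deviation and equal group sizes; the function name `two_sample_z_power` and the numbers used (standard deviation 15, 50 people per group) are illustrative assumptions, not values from the article.

```python
import math
from statistics import NormalDist


def two_sample_z_power(true_diff, sigma, n_per_group, alpha=0.05):
    """Power of a two-sided two-sample z-test with known common sigma."""
    std_normal = NormalDist()
    # Standard error of the difference between the two sample means.
    se = sigma * math.sqrt(2.0 / n_per_group)
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = true_diff / se
    # Probability that the z statistic lands in either rejection region.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Power rises from the significance level (no true difference) towards 1.
for diff in (0.0, 2.0, 5.0, 10.0):
    power = two_sample_z_power(diff, sigma=15.0, n_per_group=50)
    print(f"true difference {diff:5.1f}: power {power:.3f}")
```

When the true difference is zero the function returns the significance level itself, which is the probability of a false positive rather than a true detection.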

Factors influencing power

Statistical power may depend on a number of factors. Some factors may be particular to a specific testing situation, but at a minimum, power nearly always depends on the following three factors:

* the statistical significance criterion used in the test
* the magnitude of the effect of interest in the population
* the sample size used to detect the effect

A significance criterion is a statement of how unlikely a positive result must be, if the null hypothesis of no effect is true, for the null hypothesis to be rejected. The most commonly used criteria are probabilities of 0.05 (5%, 1 in 20), 0.01 (1%, 1 in 100), and 0.001 (0.1%, 1 in 1000). If the criterion is 0.05, the probability of the data implying an effect at least as large as the observed effect when the null hypothesis is true must be less than 0.05, for the null hypothesis of no effect to be rejected. One easy way to increase the power of a test is to carry out a less conservative test by using a larger significance criterion, for example 0.10 instead of 0.05. This increases the chance of rejecting the null hypothesis (obtaining a statistically significant result) when the null hypothesis is false; that is, it reduces the risk of a type II error (false negative regarding whether an effect exists). But it also increases the risk of obtaining a statistically significant result (rejecting the null hypothesis) when the null hypothesis is not false; that is, it increases the risk of a type I error (false positive).

The magnitude of the effect of interest in the population can be quantified in terms of an effect size, where there is greater power to detect larger effects. An effect size can be a direct value of the quantity of interest, or it can be a standardized measure that also accounts for the variability in the population. For example, in an analysis comparing outcomes in a treated and control population, the difference of outcome means $\bar{Y} - \bar{X}$ would be a direct estimate of the effect size, whereas $(\bar{Y} - \bar{X})/\sigma$ would be an estimated standardized effect size, where $\sigma$ is the common standard deviation of the outcomes in the treated and control groups. If constructed appropriately, a standardized effect size, along with the sample size, will completely determine the power. An unstandardized (direct) effect size is rarely sufficient to determine the power, as it does not contain information about the variability in the measurements.

The sample size determines the amount of sampling error inherent in a test result. Other things being equal, effects are harder to detect in smaller samples. Increasing sample size is often the easiest way to boost the statistical power of a test. How increased sample size translates to higher power is a measure of the efficiency of the test, for example, the sample size required for a given power.
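The effect of sample size on power can also be checked by simulation. The sketch below is a hedged illustration, not a reference implementation: it assumes normally distributed data with a known standard deviation, uses a one-sided ''z''-test, and estimates power as the fraction of simulated experiments that reject the null hypothesis. The name `simulated_power` and all parameter values are invented for the example.

```python
import math
import random
from statistics import NormalDist, fmean


def simulated_power(effect, sigma, n, alpha=0.05, trials=2000, seed=0):
    """Monte Carlo estimate of power for a one-sided one-sample z-test."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    z_crit = NormalDist().inv_cdf(1 - alpha)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(effect, sigma) for _ in range(n)]
        z = fmean(sample) / (sigma / math.sqrt(n))
        if z > z_crit:
            rejections += 1
    return rejections / trials


# Same effect and significance level, increasing sample size.
for n in (10, 40, 160):
    print(n, simulated_power(effect=0.3, sigma=1.0, n=n))
```

Setting `effect=0.0` gives an estimate of the type I error rate instead, which should sit near the chosen significance level.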

The precision with which the data are measured also influences statistical power. Consequently, power can often be improved by reducing the measurement error in the data. A related concept is to improve the "reliability" of the measure being assessed (as in psychometric reliability).

The design of an experiment or observational study often influences the power. For example, in a two-sample testing situation with a given total sample size $n$, it is optimal to have equal numbers of observations from the two populations being compared (as long as the variances in the two populations are the same). In regression analysis and analysis of variance, there are extensive theories and practical strategies for improving the power based on optimally setting the values of the independent variables in the model.

Interpretation

Although there are no formal standards for power (sometimes referred to as $\pi$), most researchers assess the power of their tests using $\pi = 0.80$ as a standard for adequacy. This convention implies a four-to-one trade-off between $\beta$-risk and $\alpha$-risk ($\beta$ is the probability of a type II error and $\alpha$ is the probability of a type I error; 0.2 and 0.05 are the conventional values for $\beta$ and $\alpha$). However, there will be times when this 4-to-1 weighting is inappropriate. In medicine, for example, tests are often designed so that false negatives (type II errors) are very unlikely. But this inevitably raises the risk of obtaining a false positive (a type I error). The rationale is that it is better to tell a healthy patient "we may have found something, let's test further" than to tell a diseased patient "all is well."

Power analysis is appropriate when the concern is with the correct rejection of a false null hypothesis. In many contexts, the issue is less about determining whether there is or is not a difference and more about getting a more refined estimate of the population effect size. For example, if we were expecting a population correlation between intelligence and job performance of around 0.50, a sample size of about 30 will give us approximately 80% power ($\alpha = 0.05$, two-tail) to reject the null hypothesis of zero correlation. However, in doing this study we are probably more interested in knowing whether the correlation is 0.30 or 0.60 or 0.50. In this context we would need a much larger sample size in order to reduce the confidence interval of our estimate to a range that is acceptable for our purposes. Techniques similar to those employed in a traditional power analysis can be used to determine the sample size required for the width of a confidence interval to be less than a given value.
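As a rough illustration of planning for confidence-interval width rather than for power, the sketch below uses the Fisher ''z''-transformation, a standard large-sample approximation that the article itself does not name. The function names `correlation_ci` and `n_for_ci_width` and the target width of 0.2 are assumptions made for this example.

```python
import math
from statistics import NormalDist


def correlation_ci(r, n, confidence=0.95):
    """Approximate confidence interval for a correlation via Fisher's z."""
    z = math.atanh(r)                # Fisher transformation of r
    se = 1.0 / math.sqrt(n - 3)      # approximate standard error of z
    q = NormalDist().inv_cdf(0.5 + confidence / 2)
    return math.tanh(z - q * se), math.tanh(z + q * se)


def n_for_ci_width(r, max_width, confidence=0.95):
    """Smallest n whose approximate interval is no wider than max_width."""
    n = 4  # the approximation needs n > 3
    while True:
        low, high = correlation_ci(r, n, confidence)
        if high - low <= max_width:
            return n
        n += 1


low, high = correlation_ci(0.5, 20)
print(f"n = 20: interval from {low:.2f} to {high:.2f}")
print("n needed for total width 0.2:", n_for_ci_width(0.5, 0.2))
```

The linear search is deliberately naive; it is fast enough here because the interval width shrinks steadily as the sample size grows.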

Many statistical analyses involve the estimation of several unknown quantities. In simple cases, all but one of these quantities are nuisance parameters. In this setting, the only relevant power pertains to the single quantity that will undergo formal statistical inference. In some settings, particularly if the goals are more "exploratory", there may be a number of quantities of interest in the analysis. For example, in a multiple regression analysis we may include several covariates of potential interest. In situations such as this where several hypotheses are under consideration, it is common that the powers associated with the different hypotheses differ. For instance, in multiple regression analysis, the power for detecting an effect of a given size is related to the variance of the covariate. Since different covariates will have different variances, their powers will differ as well.

Any statistical analysis involving multiple hypotheses is subject to inflation of the type I error rate if appropriate measures are not taken. Such measures typically involve applying a higher threshold of stringency to reject a hypothesis in order to compensate for the multiple comparisons being made (''e.g.'' as in the Bonferroni method). In this situation, the power analysis should reflect the multiple testing approach to be used. Thus, for example, a given study may be well powered to detect a certain effect size when only one test is to be made, but the same effect size may have much lower power if several tests are to be performed.
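The loss of power under a multiple-testing correction can be sketched numerically. The example assumes a two-sided one-sample ''z''-test with a standardized effect size and applies a Bonferroni correction by dividing the significance level by the number of tests; `z_test_power` and the inputs (effect size 0.3, sample size 80) are hypothetical.

```python
import math
from statistics import NormalDist


def z_test_power(effect_size, n, alpha):
    """Power of a two-sided one-sample z-test for a standardized effect."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect_size * math.sqrt(n)
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Bonferroni: each of m tests is run at level alpha / m.
for m in (1, 5, 20):
    power = z_test_power(effect_size=0.3, n=80, alpha=0.05 / m)
    print(f"{m:2d} tests: per-test power {power:.3f}")
```

The same effect size and sample size yield lower per-test power as the number of corrected comparisons grows, which is why the power analysis has to use the corrected significance level.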

It is also important to consider the statistical power of a hypothesis test when interpreting its results. A test's power is the probability of correctly rejecting the null hypothesis when it is false; a test's power is influenced by the choice of significance level for the test, the size of the effect being measured, and the amount of data available. A hypothesis test may fail to reject the null, for example, if a true difference exists between two populations being compared by a t-test but the effect is small and the sample size is too small to distinguish the effect from random chance. Many clinical trials, for instance, have low statistical power to detect differences in adverse effects of treatments, since such effects may be rare and the number of affected patients small.

''A priori'' vs. ''post hoc'' analysis

Power analysis can either be done before (''a priori'' or prospective power analysis) or after (''post hoc'' or retrospective power analysis) data are collected. ''A priori'' power analysis is conducted prior to the research study, and is typically used in estimating sufficient sample sizes to achieve adequate power. ''Post-hoc'' analysis of "observed power" is conducted after a study has been completed, and uses the obtained sample size and effect size to determine what the power was in the study, assuming the effect size in the sample is equal to the effect size in the population.

Whereas the utility of prospective power analysis in experimental design is universally accepted, post hoc power analysis is fundamentally flawed. Falling for the temptation to use the statistical analysis of the collected data to estimate the power will result in uninformative and misleading values. In particular, it has been shown that ''post-hoc'' "observed power" is a one-to-one function of the ''p''-value attained. This has been extended to show that all ''post-hoc'' power analyses suffer from what is called the "power approach paradox" (PAP), in which a study with a null result is thought to show ''more'' evidence that the null hypothesis is actually true when the ''p''-value is smaller, since the apparent power to detect an actual effect would be higher. In fact, a smaller ''p''-value is properly understood to make the null hypothesis ''relatively'' less likely to be true.

Application

Funding agencies, ethics boards and research review panels frequently request that a researcher perform a power analysis, for example to determine the minimum number of animal test subjects needed for an experiment to be informative. In frequentist statistics, an underpowered study is unlikely to allow one to choose between hypotheses at the desired significance level. In Bayesian statistics, hypothesis testing of the type used in classical power analysis is not done. In the Bayesian framework, one updates prior beliefs using the data obtained in a given study. In principle, a study that would be deemed underpowered from the perspective of hypothesis testing could still be used in such an updating process. However, power remains a useful measure of how much a given experiment size can be expected to refine one's beliefs. A study with low power is unlikely to lead to a large change in beliefs.

Example

The following is an example that shows how to compute power for a randomized experiment. Suppose the goal of an experiment is to study the effect of a treatment on some quantity, and to compare research subjects by measuring the quantity before and after the treatment, analyzing the data using a paired t-test. Let $A_i$ and $B_i$ denote the pre-treatment and post-treatment measures on subject $i$, respectively. The possible effect of the treatment should be visible in the differences $D_i = B_i - A_i$, which are assumed to be independently distributed, all with the same expected mean value and variance.

The effect of the treatment can be analyzed using a one-sided t-test. The null hypothesis of no effect will be that the mean difference will be zero, i.e. $H_0: \mu_D = 0$. In this case, the alternative hypothesis states a positive effect, corresponding to $H_1: \mu_D > 0$. The test statistic is:

$$T_n = \frac{\bar{D}_n - 0}{\hat{\sigma}_D / \sqrt{n}},$$

where

$$\bar{D}_n = \frac{1}{n} \sum_{i=1}^{n} D_i,$$

$n$ is the sample size and $\hat{\sigma}_D / \sqrt{n}$ is the standard error. The test statistic under the null hypothesis follows a Student t-distribution with the additional assumption that the data are identically distributed $N(\mu_D, \sigma_D^2)$. Furthermore, assume that the null hypothesis will be rejected at the significance level of $\alpha = 0.05$. Since $n$ is large, one can approximate the t-distribution by a normal distribution and calculate the critical value using the quantile function $\Phi^{-1}$, the inverse of the cumulative distribution function of the normal distribution. It turns out that the null hypothesis will be rejected if

$$T_n > 1.64.$$

Now suppose that the alternative hypothesis is true and $\mu_D = \theta$. Then, the power is

$$B(\theta) = \Pr\left(T_n > 1.64 \mid \mu_D = \theta\right) = \Pr\left(\frac{\bar{D}_n - 0}{\hat{\sigma}_D / \sqrt{n}} > 1.64 \,\Big|\, \mu_D = \theta\right) = 1 - \Pr\left(\frac{\bar{D}_n - \theta}{\hat{\sigma}_D / \sqrt{n}} < 1.64 - \frac{\theta}{\hat{\sigma}_D / \sqrt{n}} \,\Big|\, \mu_D = \theta\right).$$

For large $n$, $\frac{\bar{D}_n - \theta}{\hat{\sigma}_D / \sqrt{n}}$ approximately follows a standard normal distribution when the alternative hypothesis is true, so the approximate power can be calculated as

$$B(\theta) \approx 1 - \Phi\left(1.64 - \frac{\theta}{\hat{\sigma}_D / \sqrt{n}}\right).$$

According to this formula, the power increases with the value of the parameter $\theta$. For a specific value of $\theta$, a higher power may be obtained by increasing the sample size $n$.

It is not possible to guarantee a sufficiently large power for all values of $\theta$, as $\theta$ may be very close to 0. The minimum (infimum) value of the power is equal to the significance level of the test, $\alpha$, in this example 0.05. However, it is of no importance to distinguish between $\theta = 0$ and small positive values. If it is desirable to have enough power, say at least 0.90, to detect values of $\theta > 1$, the required sample size can be calculated approximately:

$$B(1) \approx 1 - \Phi\left(1.64 - \frac{\sqrt{n}}{\hat{\sigma}_D}\right) > 0.90,$$

from which it follows that

$$\Phi\left(1.64 - \frac{\sqrt{n}}{\hat{\sigma}_D}\right) < 0.10.$$

Hence, using the quantile function,

$$\sqrt{n} > \hat{\sigma}_D \left(1.64 - z_{0.10}\right) = \hat{\sigma}_D \left(1.64 + 1.28\right) \approx 2.92\,\hat{\sigma}_D, \qquad \text{that is,} \qquad n \gtrsim 8.56\,\hat{\sigma}_D^2$$

(the constant 8.56 uses the unrounded quantiles 1.645 and 1.282), where $z_{0.10} = \Phi^{-1}(0.10)$ is a standard normal quantile; refer to the Probit article for an explanation of the relationship between $\Phi$ and z-values.
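The calculation in this example can be reproduced with a short Python sketch. It follows the normal approximation described above for a one-sided test; the names `approx_power` and `required_n`, the use of `theta` for the true mean difference and `sigma_hat` for the estimated standard deviation of the differences, and the input `sigma_hat = 2.0` are assumptions made for illustration.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def approx_power(theta, sigma_hat, n, alpha=0.05):
    """Normal-approximation power of the one-sided test at mean difference theta."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)  # about 1.64 for alpha = 0.05
    return 1 - STD_NORMAL.cdf(z_crit - theta * math.sqrt(n) / sigma_hat)


def required_n(theta, sigma_hat, target_power=0.90, alpha=0.05):
    """Smallest integer n that reaches target_power under the approximation."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha)
    z_power = STD_NORMAL.inv_cdf(target_power)  # about 1.28 for power 0.90
    return math.ceil(((z_alpha + z_power) * sigma_hat / theta) ** 2)


n = required_n(theta=1.0, sigma_hat=2.0)
print("required n:", n)
print("power at that n:", round(approx_power(1.0, 2.0, n), 3))
print("power when theta = 0:", round(approx_power(0.0, 2.0, n), 3))
```

The last line illustrates that the power falls back to the significance level as the true effect approaches zero, whatever the sample size.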

Extension

Bayesian power

In the frequentist setting, parameters are assumed to have a specific value, which is unlikely to be true. This issue can be addressed by assuming the parameter has a distribution. The resulting power is sometimes referred to as Bayesian power, which is commonly used in clinical trial design.
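One common way to read this idea is to average the frequentist power over a prior distribution for the effect. The sketch below does that numerically for a one-sided ''z''-test with a normal prior. The choice of prior, the known standard deviation, and the names `frequentist_power` and `bayesian_power` are all assumptions of this example rather than a definition taken from the article.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def frequentist_power(theta, sigma, n, alpha=0.05):
    """Power of a one-sided z-test when the true effect is exactly theta."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)
    return 1 - STD_NORMAL.cdf(z_crit - theta * math.sqrt(n) / sigma)


def bayesian_power(prior_mean, prior_sd, sigma, n, alpha=0.05, grid=4001):
    """Prior-weighted average of the power (trapezoidal rule on a grid)."""
    prior = NormalDist(prior_mean, prior_sd)
    start = prior_mean - 8 * prior_sd       # cover +/- 8 prior sds
    step = 16 * prior_sd / (grid - 1)
    total = 0.0
    for i in range(grid):
        theta = start + i * step
        weight = 0.5 if i in (0, grid - 1) else 1.0  # trapezoid end points
        total += weight * frequentist_power(theta, sigma, n, alpha) * prior.pdf(theta)
    return total * step


print(round(frequentist_power(0.5, sigma=1.0, n=50), 3))
print(round(bayesian_power(0.5, 0.25, sigma=1.0, n=50), 3))
```

In this setup the averaged value is lower than the power at the prior mean, because part of the prior mass sits on smaller effects that are harder to detect.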

Predictive probability of success

Both frequentist power and Bayesian power use statistical significance as the success criterion. However, statistical significance is often not enough to define success. To address this issue, the power concept can be extended to the concept of predictive probability of success (PPOS). The success criterion for PPOS is not restricted to statistical significance, and PPOS is commonly used in clinical trial designs.

Software for power and sample size calculations

Numerous free and/or open source programs are available for performing power and sample size calculations. These include:

* G*Power (https://www.gpower.hhu.de/)
* WebPower, free online statistical power analysis (https://webpower.psychstat.org)
* Free and open source online calculators (https://powerandsamplesize.com)
* PowerUp!, which provides convenient Excel-based functions to determine minimum detectable effect size and minimum required sample size for various experimental and quasi-experimental designs
* PowerUpR, the R package version of PowerUp!, which additionally includes functions to determine sample size for various multilevel randomized experiments with or without budgetary constraints
* R package pwr
* R package WebPower
* Python package statsmodels (https://www.statsmodels.org/)

See also

* Cohen's h
* Effect size
* Efficiency
* Neyman–Pearson lemma
* Sample size
* Uniformly most powerful test
